Modern computer vision applications rely heavily on deep learning models trained in a supervised manner. Training uses input data together with its annotations and a cost function, and the learning objective is to minimize the difference between model predictions and ground-truth annotations. To make this possible, human annotators label each data point or image with, for example, bounding boxes, segmentation masks, or keypoints.

Computer vision algorithms use annotated data to train models for a variety of tasks, including object detection in images and videos and image classification. Without accurate and consistent annotation, machine learning models would struggle to distinguish between objects and to produce correct predictions. In an object detection application, for instance, annotation can mean marking each object in an image with a bounding box that specifies where it is.

Types of data annotation techniques

There are different types of data annotation techniques, including:

Bounding Boxes

These are used to identify the location of objects within an image. A bounding box is a rectangular box that is drawn around an object to indicate its location and size.
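
As a minimal sketch of how such a box might be represented and compared, the snippet below stores a box as its corner coordinates and computes intersection over union (IoU), a common measure of how well a predicted box matches an annotated one. The corner format and the `BoundingBox` class are assumptions made for illustration; annotation tools and datasets use several different box conventions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (corner format is an assumption)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def area(self) -> float:
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a: BoundingBox, b: BoundingBox) -> float:
    """Intersection over union of two boxes, a value in [0, 1]."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # the boxes do not overlap
    intersection = inter_w * inter_h
    union = a.area + b.area - intersection
    return intersection / union if union > 0 else 0.0


annotated = BoundingBox(10, 10, 50, 50)   # box drawn by an annotator
predicted = BoundingBox(30, 30, 70, 70)   # box proposed by a model
overlap = iou(annotated, predicted)
```

An IoU of 1.0 means the two boxes coincide, and 0.0 means they do not overlap at all.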

Segmentation Masks

These are used to identify the exact shape of an object within an image. A segmentation mask is a per-pixel map, the same size as the image, that marks which pixels belong to the object and so traces its exact boundary.
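
To make the idea concrete, here is a small sketch that treats a mask as a grid of 0/1 values and derives the object's pixel area and its tightest enclosing box. The nested-list representation is an assumption chosen to keep the example dependency-free; in practice masks are usually stored as image files, arrays, polygons, or run-length encodings.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]  # rows of 0/1 values, one value per pixel


def mask_area(mask: Mask) -> int:
    """Number of pixels that belong to the object."""
    return sum(sum(row) for row in mask)


def mask_to_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Tightest (x_min, y_min, x_max, y_max) around the mask, inclusive indices."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, value in enumerate(row) if value]
    if not ys:
        return None  # empty mask, no object
    return min(xs), min(ys), max(xs), max(ys)


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
]
area = mask_area(mask)
bbox = mask_to_bbox(mask)
```

The box can always be recovered from the mask, but not the other way round, which is why masks are the more expensive annotation to produce.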

Keypoints

These are used to identify specific points on an object within an image, such as facial features or joint positions in human pose estimation.
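
One widely used way to store keypoints is the COCO convention, in which each point is an `(x, y, v)` triplet and `v` records whether the point was not labeled (0), labeled but occluded (1), or labeled and visible (2). The sketch below parses such a flat list; the keypoint names and coordinates are made up for illustration.

```python
from typing import List, NamedTuple


class Keypoint(NamedTuple):
    name: str
    x: float
    y: float
    visibility: int  # 0 = not labeled, 1 = labeled but occluded, 2 = visible


def parse_keypoints(flat: List[float], names: List[str]) -> List[Keypoint]:
    """Turn a flat [x1, y1, v1, x2, y2, v2, ...] list into named keypoints."""
    if len(flat) != 3 * len(names):
        raise ValueError("expected three values (x, y, v) per keypoint name")
    return [
        Keypoint(name, flat[3 * i], flat[3 * i + 1], int(flat[3 * i + 2]))
        for i, name in enumerate(names)
    ]


names = ["nose", "left_eye", "right_eye"]      # illustrative subset
flat = [120, 80, 2, 110, 70, 2, 0, 0, 0]       # right eye was not labeled
keypoints = parse_keypoints(flat, names)
labeled = [kp.name for kp in keypoints if kp.visibility > 0]
```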

Object Tracking

This involves tracking an object over time in a video or sequence of images. The annotator will mark the object in each frame to enable the machine learning algorithm to track its movement.
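
Marking every frame by hand is costly, so annotation tools often let the annotator mark only selected keyframes and fill in the frames between them. The sketch below shows one simple way this could work, linear interpolation of box corners; it assumes the object moves roughly linearly between keyframes and is not taken from any particular tool.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def interpolate_track(keyframes: Dict[int, Box]) -> Dict[int, Box]:
    """Fill every frame between annotated keyframes by linear interpolation."""
    frames = sorted(keyframes)
    track: Dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        for frame in range(start, end):
            t = (frame - start) / (end - start)  # 0.0 at start, approaches 1.0
            track[frame] = tuple(pa + t * (pb - pa) for pa, pb in zip(a, b))
    if frames:
        track[frames[-1]] = keyframes[frames[-1]]  # keep the last keyframe as-is
    return track


# The annotator marks frames 0 and 4; frames 1 to 3 are filled in automatically.
keyframes = {0: (0.0, 0.0, 10.0, 10.0), 4: (8.0, 4.0, 18.0, 14.0)}
track = interpolate_track(keyframes)
```

The annotator then only needs to correct the frames where the interpolated box drifts away from the object.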

Although it can be time-consuming and expensive, data annotation is crucial for building machine learning models for computer vision applications. To ensure high-quality annotation, use experienced annotators and set precise annotation guidelines.

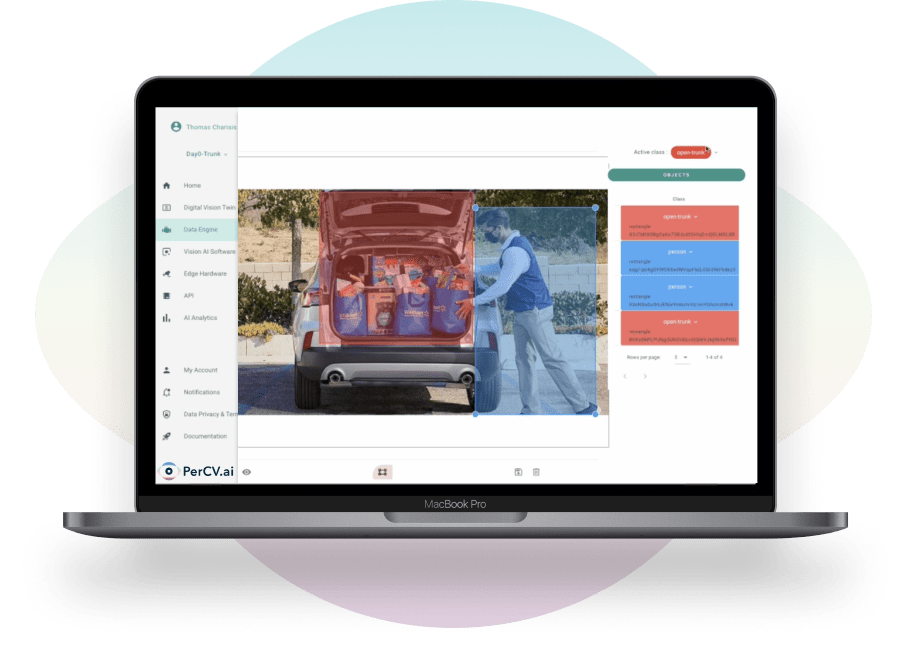

PerCV.ai Data Annotation Tool & Data Engine

Acknowledging the importance of data annotation in building a computer vision solution, the PerCV.ai Vision AI Platform comes with a built-in, intuitive annotation toolset integrated into its Data Engine features. This way, you don’t have to leave the platform to complete annotation tasks; all the necessary tools are centralized in the PerCV.ai platform, which supports managing and processing large amounts of data for scalable deployments.

Of course, this is not the only way to work with data on the PerCV.ai platform; you can bring your own data, annotated using any data annotation tool of your choice. There are numerous tools available to assist with data annotation, such as Labelbox, RectLabel, VGG Image Annotator, SuperAnnotate, V7, LabelMe, Dataturks, and Label Studio, but let’s start with one of our all-time favourite annotation tools: Computer Vision Annotation Tool (CVAT).

What is CVAT?

CVAT is an open-source, web-based tool designed for annotating images and videos. Users upload their data and annotate it with bounding boxes, polygons, points, and cuboids through a user-friendly interface.

CVAT was created by Intel and is released under the MIT license. The tool’s flexibility, adaptability, and scalability make it suitable for use across a wide range of sectors. The software is also actively maintained and frequently updated.

How does CVAT work?

Users can upload their visual data to CVAT and then annotate it with a variety of annotation tools. Bounding boxes, polygons, points, and cuboids are just a few of the annotation types that the tool offers. Additionally, users can add labels to their annotations, which facilitates the classification of visual data.
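
Once shapes carry labels, they can be exported for training. CVAT supports several export formats, COCO among them; the sketch below is not CVAT's export code but a minimal illustration of what a COCO-style detection file contains. The `to_coco` helper, the file name, and the sample shapes are hypothetical.

```python
import json
from typing import Dict, List, Tuple

Shape = Tuple[str, float, float, float, float]  # (label, x_min, y_min, x_max, y_max)


def to_coco(image_name: str, width: int, height: int, shapes: List[Shape]) -> Dict:
    """Build a minimal COCO-style dict for one image from labeled boxes."""
    labels = sorted({label for label, *_ in shapes})
    category_id = {label: i + 1 for i, label in enumerate(labels)}
    annotations = []
    for ann_id, (label, x0, y0, x1, y1) in enumerate(shapes, start=1):
        w, h = x1 - x0, y1 - y0
        annotations.append({
            "id": ann_id,
            "image_id": 1,
            "category_id": category_id[label],
            "bbox": [x0, y0, w, h],  # COCO stores x, y, width, height
            "area": w * h,
            "iscrowd": 0,
        })
    return {
        "images": [{"id": 1, "file_name": image_name,
                    "width": width, "height": height}],
        "annotations": annotations,
        "categories": [{"id": i, "name": name} for name, i in category_id.items()],
    }


shapes = [("car", 10, 20, 110, 80), ("person", 200, 40, 240, 160)]
coco = to_coco("frame_0001.jpg", 640, 480, shapes)
coco_json = json.dumps(coco, indent=2)
```

Note that COCO stores a box as its top-left corner plus width and height, not as two corners, which is a frequent source of bugs when converting between formats.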

CVAT also offers collaboration features so that teams can work together on large datasets. Multiple users can work on the same dataset, and changes are shared with the other users, which helps keep everyone on the same page.

Why is CVAT an essential image annotation tool for data scientists?

Data scientists use CVAT because it lets them annotate massive datasets quickly and easily. The tool’s user-friendly design and straightforward annotation tools also make it suitable for non-technical users. In addition, its collaboration capabilities let groups work together on large datasets, which simplifies preparing data for analysis and for training machine learning models.

Due to its open-source status, CVAT is also quite adaptable, enabling users to customize the tool to suit their unique requirements. For businesses with specific needs for their visual data annotation, this capability is extremely helpful.

In short, tools for annotating video and images, like CVAT, have become crucial in a number of disciplines, such as data science, machine learning, and computer vision. CVAT is an open-source, web-based tool that allows users to annotate images and videos quickly and easily. It is highly customizable and scalable and provides collaboration features, making it well suited to large teams working on large datasets. To learn more about CVAT, visit its website or GitHub page.

CVAT Alternatives

Although CVAT is a popular tool for annotating images and videos, data scientists and researchers should also look at its alternatives, such as the tools named earlier: Labelbox, RectLabel, VGG Image Annotator, SuperAnnotate, V7, LabelMe, Dataturks, and Label Studio.

To determine which of these tools best meets your objectives, weigh their individual features and advantages against the “big picture”: the real-life problem you are trying to solve.

Data Segmentation: Optimizing Data Annotation for Computer Vision and AI

Data segmentation plays a crucial role in data annotation for machine learning and computer vision by helping to identify and isolate objects or regions of interest in an image or video, which simplifies annotation and improves its accuracy and consistency. The procedure entails breaking a large dataset into smaller subsets that contain comparable objects or features, making it easier for annotators to identify and classify them correctly.

In object recognition, for instance, data segmentation helps identify and separate the distinct objects inside an image or video frame, making it simpler for annotators to annotate and name each one. Similarly to semantic segmentation, it can help identify and classify the regions or categories in an image so that annotators can label each region precisely.

The time and effort needed to annotate the entire dataset can be decreased by segmenting the data so that annotators can concentrate on particular areas or items. This can improve the accuracy and consistency of annotations, while also reducing the overall cost and time required for annotation.
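
As a rough sketch of the "smaller subsets" idea, the snippet below groups images into per-label batches so that each annotator can concentrate on one kind of object. It assumes a coarse label per image is already available (for example from a pre-trained classifier or from folder names); the file names and labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def split_by_label(items: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group (file_name, coarse_label) pairs into per-label annotation batches."""
    batches: Dict[str, List[str]] = defaultdict(list)
    for file_name, label in items:
        batches[label].append(file_name)
    return dict(batches)


items = [
    ("img_001.jpg", "vehicle"),
    ("img_002.jpg", "pedestrian"),
    ("img_003.jpg", "vehicle"),
]
batches = split_by_label(items)
```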

State-of-the-art Segmentation with Segment Anything Model (SAM) by Meta AI

The Segment Anything Model (SAM) will interest anyone who has worked with computer vision applications such as object detection. SAM is a machine learning model for object segmentation, which entails locating objects within an image and outlining them at the pixel level. Let’s examine SAM in more detail, including what it is, how it functions, and some of the tools and resources that can be used to incorporate it into machine learning projects.

What is SAM and how does it work?

SAM is a deep neural network that determines the borders of objects within an image at the pixel level. It is trained on a large dataset of images with annotated masks. The design follows an encoder-decoder pattern: a Vision Transformer image encoder computes an embedding of the whole image once, a prompt encoder embeds the user's prompts (such as points, boxes, or rough masks), and a lightweight mask decoder combines the two to predict which pixels belong to the prompted object and which belong to the background. SAM does not assign a class to the object; it outputs a mask, and for an ambiguous prompt it can return several candidate masks, each with a predicted quality score.

SAM is a capable object segmentation tool with a wide range of uses. For instance, it can be used to separate distinct objects inside an image, to find cell borders in microscopy images, or, applied frame by frame, to help follow moving objects in videos.

A GPT for Computer Vision?

Meta AI has developed a project called “Segment Anything” to democratize segmentation by providing a new task, dataset, and model for image segmentation. The Segment Anything Model (SAM) and the Segment Anything 1-Billion mask dataset (SA-1B) are its answer to building an accurate segmentation model for various tasks while reducing the need for task-specific modeling expertise and large volumes of carefully annotated data. SAM is a unified model that handles both interactive and automatic segmentation and can generalize to new types of objects and images because it is trained on a diverse, high-quality dataset of more than 1 billion masks. SA-1B is the largest segmentation dataset released to date, with over 11 million images and 1.1 billion segmentation masks.

SAM can transfer to different domains and perform different tasks, including segmenting a prompted object, generating valid masks in the face of object ambiguity, and detecting and masking all objects in an image. Once the image embedding has been computed, its lightweight prompt encoder and mask decoder can run in real time on a CPU in a web browser, and Meta reports that interactive annotation of a mask with SAM takes about 14 seconds. SAM has the potential to fuel future applications in a wide variety of fields that require locating and segmenting any object in any given image.
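
To illustrate interactive segmentation and the handling of ambiguity, here is a hedged sketch that prompts SAM with a single foreground click using Meta's `segment_anything` Python package and keeps the highest-scoring candidate mask. The function names `segment_with_point` and `best_mask_index` are our own, the checkpoint path must point to a checkpoint file you have downloaded separately, and the `vit_b` default is just one of the available model sizes.

```python
from typing import Sequence, Tuple


def best_mask_index(scores: Sequence[float]) -> int:
    """Index of the candidate mask with the highest predicted quality score."""
    if len(scores) == 0:
        raise ValueError("no candidate masks")
    return max(range(len(scores)), key=lambda i: scores[i])


def segment_with_point(image_rgb, point_xy: Tuple[int, int],
                       checkpoint_path: str, model_type: str = "vit_b"):
    """Prompt SAM with one foreground click and return the best binary mask.

    image_rgb is expected to be an HxWx3 uint8 NumPy array in RGB order.
    """
    # Imported lazily so the module loads without the optional dependencies.
    import numpy as np
    from segment_anything import SamPredictor, sam_model_registry

    sam = sam_model_registry[model_type](checkpoint=checkpoint_path)
    predictor = SamPredictor(sam)
    predictor.set_image(image_rgb)  # runs the heavy image encoder once
    masks, scores, _ = predictor.predict(
        point_coords=np.array([point_xy]),
        point_labels=np.array([1]),  # 1 = foreground click, 0 = background
        multimask_output=True,       # several candidates for ambiguous prompts
    )
    return masks[best_mask_index(scores)]
```

Because the image embedding is computed once in `set_image`, further clicks on the same image only rerun the lightweight decoder, which is what makes the interactive workflow fast.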

Other tools and resources for implementing SAM

SAM can be incorporated into machine learning projects using a variety of methods and resources. Meta's official implementation and pre-trained checkpoints are released for PyTorch in the open-source segment-anything repository. Ports to other frameworks also exist, including TensorFlow, the open-source library created by Google that offers a variety of tools for developing and deploying machine learning models.

Another helpful tool is Mask R-CNN, a deep learning model that adds a segmentation branch to the Faster R-CNN object detection model. Mask R-CNN can be used for instance segmentation, which entails locating, classifying, and outlining each individual object inside an image or video. When it was introduced, Mask R-CNN achieved state-of-the-art results on a number of benchmark datasets, including COCO and Cityscapes.

Conclusion

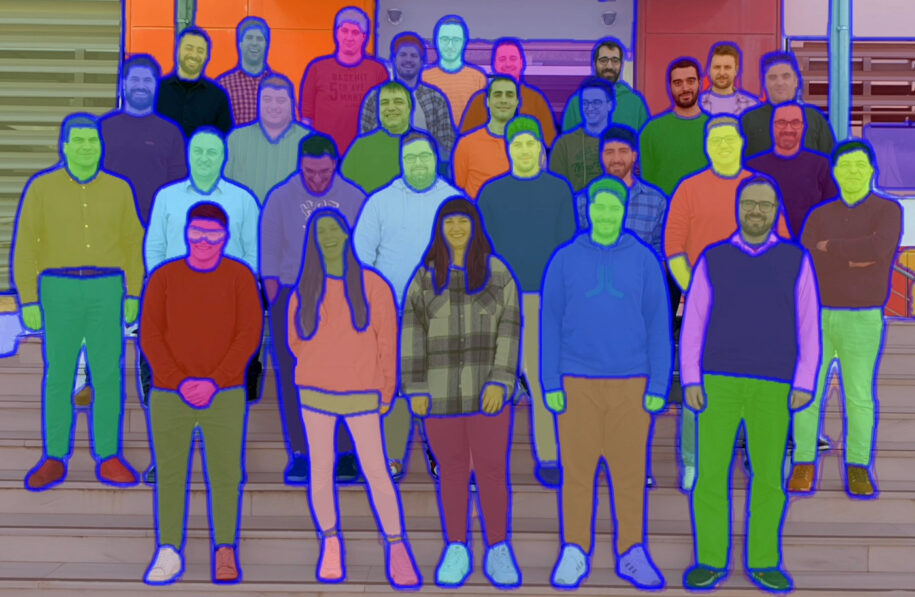

Data annotation and image segmentation tools are evolving with incredible speed, with AI fundamentally transforming this area and opening up a whole new spectrum of capabilities and features. Our PerCV.ai team is working tirelessly to test and adopt the most relevant of those, while staying focused on our mission to provide the best-in-class end-to-end computer vision & AI platform, a complete Vision AI Infrastructure to deploy edge Vision-AI at scale.