A new, radical CNN design approach is introduced, aimed at reducing the total computational load during inference. This is achieved through a holistic intervention on both the CNN architecture and the training procedure, which targets parsimonious inference by learning to exploit or remove the redundant capacity of a CNN architecture. This is accomplished by introducing a new structural element that can be inserted as an add-on into any contemporary CNN architecture, while preserving or even improving its recognition accuracy.

Our approach formulates a systematic, data-driven method for developing CNNs that are trained to change size and form in real time during inference, targeting the smallest possible computational footprint. Results are provided for the optimal implementation on a few modern, high-end mobile computing platforms, indicating a significant speed-up of up to 3x.

Top-performing deep-learning systems usually involve deep and wide architectures and therefore come at the cost of increased storage and computational requirements, while the trend is to keep increasing the depth of the networks. Despite this drawback, deep neural networks have a very intriguing property that opens up opportunities for optimizing both storage space and computation: the redundancy of parameters and computational units (e.g. convolutional kernels). The presence of this redundancy also raises the question of whether a network needs to be deep at all. However, deeper models have been shown to outperform shallower ones.

At the same time, there is an increasing need to use deep CNNs in applications running on embedded devices. These devices, especially in the Internet of Things (IoT) era, are often equipped with small storage and low computational capabilities. As such, they can neither store large CNN models nor cope with the computational complexity of a deep and wide CNN. Therefore, to make CNNs appealing for mobile devices, both the parameter size and the number of kernels need to be reduced. This leads to a fundamental dilemma.

Can we design computationally efficient CNNs that maintain recognition accuracy and generalization, while keeping the overall structure efficient enough to operate on mobile embedded devices?

To address this dilemma, Irida Labs has developed a systematic way of implementing CNN variants that are parsimonious in computations. To this end, the proposed approach allows us to contract and train a CNN to:

The proposed method is compatible with any contemporary deep CNN architecture and can be combined with other model-thinning approaches (optimal filtering, factorization, etc.), resulting in further processing optimization.

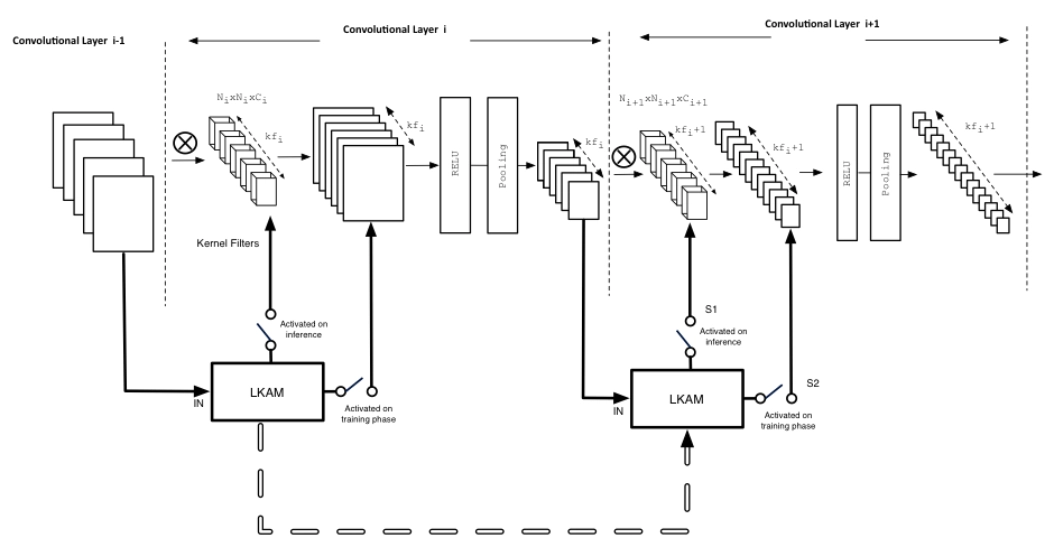

The idea is illustrated in the accompanying figure, which shows part of a typical convolutional network, depicting only the i-th and the (i+1)-th convolutional layers. A Learning Kernel-Activation Module (LKAM) is introduced, linking two consecutive convolutional layers. This learning switch module can switch individual kernels of a layer on and off depending on its input, which is the output of the previous convolutional layer.

The module learns which kernels to disable during CNN training. The training process is specifically devised to facilitate this operation by exploiting the sparsity usually present in image data.
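To make the switching idea concrete, here is a minimal, dependency-free Python sketch of an input-dependent kernel gate. It is not the LKAM as published: the summary above does not specify the module's internals, so the gating rule used here (global average pooling, a linear layer, a sigmoid and a fixed threshold) is an assumption, and all names (`kernel_switches`, `gated_conv_layer`, `gate_weights`, `gate_bias`, `threshold`) are hypothetical. The gate parameters are hard-coded for illustration, whereas in the described approach they would be learned during training.

```python
import math

def global_avg_pool(feature_maps):
    """Reduce each channel (a 2-D list) to its mean value."""
    return [sum(sum(row) for row in fm) / (len(fm) * len(fm[0]))
            for fm in feature_maps]

def kernel_switches(feature_maps, gate_weights, gate_bias, threshold=0.5):
    """Assumed gating rule: one on/off switch per kernel of the next layer,
    computed from the previous layer's output."""
    pooled = global_avg_pool(feature_maps)
    switches = []
    for w_row, b in zip(gate_weights, gate_bias):
        score = sum(w * p for w, p in zip(w_row, pooled)) + b
        prob = 1.0 / (1.0 + math.exp(-score))  # sigmoid
        switches.append(prob >= threshold)
    return switches

def conv2d_single(feature_maps, kernel):
    """'Valid' cross-correlation of a multi-channel input with one kernel.
    kernel[c] is the k x k filter slice applied to channel c."""
    k = len(kernel[0])
    h = len(feature_maps[0]) - k + 1
    w = len(feature_maps[0][0]) - k + 1
    out = [[0.0] * w for _ in range(h)]
    for c, fm in enumerate(feature_maps):
        for y in range(h):
            for x in range(w):
                out[y][x] += sum(fm[y + dy][x + dx] * kernel[c][dy][dx]
                                 for dy in range(k) for dx in range(k))
    return out

def gated_conv_layer(feature_maps, kernels, switches):
    """Evaluate only the switched-on kernels; disabled kernels are skipped
    and contribute an all-zero output map."""
    k = len(kernels[0][0])
    h = len(feature_maps[0]) - k + 1
    w = len(feature_maps[0][0]) - k + 1
    outputs, evaluated = [], 0
    for kernel, on in zip(kernels, switches):
        if on:
            outputs.append(conv2d_single(feature_maps, kernel))
            evaluated += 1
        else:
            outputs.append([[0.0] * w for _ in range(h)])
    return outputs, evaluated

# Toy input standing in for the output of layer i: 2 channels of 4x4.
x = [[[1.0] * 4 for _ in range(4)], [[0.0] * 4 for _ in range(4)]]
# Layer i+1: 3 kernels, each with 2 channels of 3x3 weights (all ones).
kernels = [[[[1.0] * 3 for _ in range(3)] for _ in range(2)] for _ in range(3)]
# Hypothetical gate parameters (hand-picked here, learned in practice).
gate_weights = [[4.0, 0.0], [-4.0, 0.0], [0.0, 4.0]]
gate_bias = [0.0, 0.0, -1.0]

switches = kernel_switches(x, gate_weights, gate_bias)
outputs, evaluated = gated_conv_layer(x, kernels, switches)
print(switches, f"{evaluated}/{len(kernels)} kernels evaluated")
```

For this toy input only the first of the three kernels is evaluated. Because the cost of a convolutional layer grows linearly with the number of kernels it evaluates, skipping kernels in this way is where an input-dependent saving would come from in such a scheme.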

Continue reading for the full details of the approach, the implementation and simulation we conducted, and the evaluation of the results.

This paper by I. Theodorakopoulos, V. Pothos, D. Kastaniotis and N. Fragoulis was published in January 2017.
