Goals and Objectives

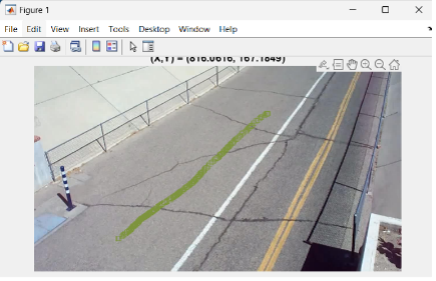

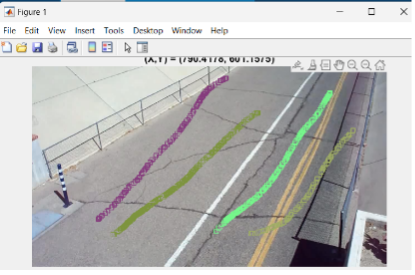

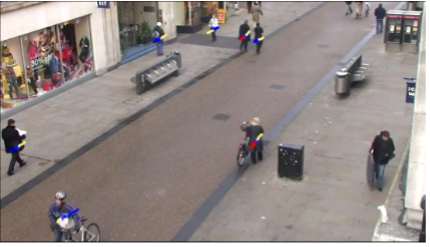

Create a comprehensive dataset covering different “smart city” and “smart surveillance” scenarios, captured in real-world conditions by sensors at different locations and under different environmental conditions.

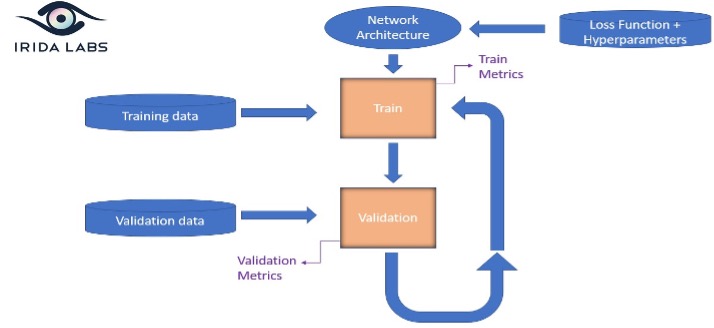

Develop machine vision technology based on artificial intelligence and deep learning, trained on real-time data as well as on existing datasets.

Exploit deep learning techniques and IRIDA’s know-how in training neural network modules and implementing deep learning architectures in real time for commercial purposes, gained through collaboration with companies such as Qualcomm, Analog Devices, Arrow Electronics, Cadence and Xilinx.

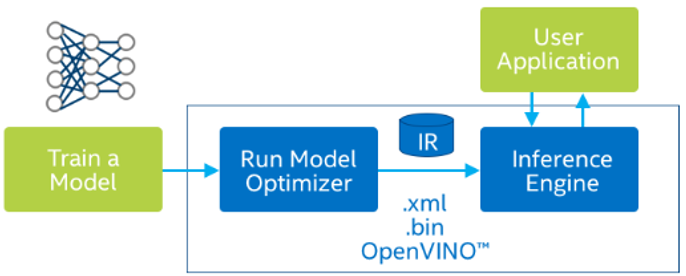

Pilot the implementation of these functionalities on embedded systems (edge devices), using heterogeneous computing techniques to make optimal use of available computing resources such as CPU, GPU and DSP.