Artificial intelligence (AI) and machine learning (ML) have revolutionized computer vision, enabling systems to identify, classify, and interpret visual data in ways that were once impossible. However, the accuracy and effectiveness of these systems depend heavily on the quality and quantity of their training data. Collecting and labeling real-world data for computer vision is a major challenge that demands a significant investment of resources, expertise, and time. Inaccuracies or biases in training data can lead to inaccurate or biased AI models, which can have negative consequences in real-world applications. This post covers the importance of training data for computer vision applications, the challenges of collecting and labeling real-world data, and best practices for ensuring training data quality and accuracy. We’ll also discuss how synthetic data can be a useful tool for training and testing AI models.

Index

- Challenges in collecting and labelling real-world data for Computer Vision

- Training Computer Vision: quality and accuracy best practices

- Synthetic Data for Computer Vision Artificial Intelligence

- Advantages of Synthetic Data

- Challenges of Synthetic Data

- Tools and Methods for Generating Synthetic Data

- Photorealism - Bringing Virtual Worlds to Life

- Photorealism Tools

- 2D embeddings maps

- The Role of Generative Models in Synthetic Data for Computer Vision

- Synthetic Data & Photorealism for car licence plates training dataset

- Data Management with PerCV.ai Platform

- When training goes wrong - Computer Vision failures

Challenges in collecting and labelling real-world data for Computer Vision

Collecting and labeling real-world data for computer vision applications can be a daunting task that involves a variety of challenges.

Overcoming these challenges requires careful planning, expertise, and resources to ensure the quality, accuracy, and representativeness of the training data.

Training Computer Vision: quality and accuracy best practices

Ensuring the quality and accuracy of training data is critical to the effectiveness and fairness of AI models in computer vision applications. Best practices include defining clear data collection and labeling protocols, using diverse and representative datasets, ensuring labeling accuracy, mitigating bias, and monitoring and updating the dataset over time.

Here are some best practices for collecting and labeling training data in computer vision applications, with examples that help convey the scale involved:

Define clear data collection and labeling protocols

Establishing clear protocols for data collection and labeling can help ensure consistency and accuracy. These protocols should include guidelines for data quality, labeling standards, and any relevant ethical considerations.

The ImageNet dataset, which contains over 14 million labeled images, has a detailed protocol for data collection and labeling to ensure consistency and accuracy.

Ensure labeling accuracy

Labeling accuracy is critical for the effectiveness of the AI model. To ensure labeling accuracy, multiple annotators should be used, and inter-annotator agreement should be measured. This can help identify and resolve labeling inconsistencies and ambiguities.
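One common way to measure inter-annotator agreement is Cohen's kappa, which corrects raw agreement for the agreement two annotators would reach by chance. The snippet below is a minimal sketch for two annotators; the function name and the example labels are illustrative assumptions, not part of any specific dataset's protocol.

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical labels from two annotators for the same six images.
annotator_1 = ["car", "car", "truck", "bus", "car", "truck"]
annotator_2 = ["car", "truck", "truck", "bus", "car", "car"]
kappa = cohen_kappa(annotator_1, annotator_2)
```

A kappa near 1 suggests consistent labeling, while a value near 0 suggests agreement is no better than chance and the labeling guidelines may need clarification.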

The Open Images dataset, which contains over 9 million images with labels, uses multiple annotators to ensure labeling accuracy.

Mitigate bias

Bias in training data can lead to biased AI models, resulting in unfair or discriminatory outcomes. To mitigate bias, it is essential to identify and address potential sources of bias in the data collection and labeling process. This includes assessing the representativeness of the dataset and identifying and mitigating societal biases.

The AffectNet dataset, which contains over 1 million facial images labeled with emotions, has measures in place to mitigate gender and racial biases.

Use diverse and representative datasets

Collecting a diverse and representative dataset is crucial to ensure that the AI model can generalize to real-world scenarios. This includes capturing different viewpoints, lighting conditions, and occlusions.
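A simple first check for representativeness is to count how often each condition appears in the dataset and flag the rare ones. The sketch below assumes each image carries a metadata value such as its lighting condition; the function name, the 10% threshold, and the sample values are hypothetical.

```python
from collections import Counter

def underrepresented(values, min_share=0.10):
    """Return attribute values whose share of the dataset is below min_share."""
    counts = Counter(values)
    total = sum(counts.values())
    return sorted(v for v, c in counts.items() if c / total < min_share)

# Hypothetical per-image metadata: lighting condition of each image.
lighting = ["day"] * 80 + ["dusk"] * 15 + ["night"] * 5
rare = underrepresented(lighting)
```

The same check can be repeated for viewpoint, occlusion level, or any other attribute recorded during collection.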

The COCO dataset, which contains over 330,000 images with 80 object categories, has been widely used for object detection and segmentation tasks due to its diversity and representativeness.

Monitor and update the dataset

Computer vision applications are continually evolving, and the dataset must be monitored and updated to ensure it remains relevant and effective. This includes adding new data to improve the diversity and representativeness of the dataset and re-evaluating and updating labeling standards.

The COCO dataset (also known as MS-COCO) has been extended over successive releases with new images and annotation types, reflecting the evolution of computer vision applications.


Synthetic Data for Computer Vision Artificial Intelligence

Synthetic data is artificially generated data that can be used to train computer vision AI models. It can be produced with a variety of methods, such as render engines, simulation software, and generative models, and has emerged as a potential solution to the challenges of collecting and labeling real-world data. With synthetic data, researchers can generate large amounts of labeled data quickly and cheaply, making it easier to train AI models. The sections below describe how synthetic data can support the training of computer vision models.

Advantages of Synthetic Data

Cost-effectiveness

Collecting and labeling real-world data can be costly. Synthetic data can be generated at a fraction of the cost, making it a cost-effective solution for training AI models.

Easy labeling

Labeling real-world data can be challenging and time-consuming, particularly for complex scenarios. Synthetic data can be generated with pre-defined labels, simplifying the labeling process and reducing the potential for labeling errors.
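To illustrate why labels come for free with synthetic data, here is a toy sketch that draws a bright rectangle on a noisy background and records its bounding box at the same time. It assumes NumPy is available; the function name, image size, and object shape are illustrative choices, and a real pipeline would use a render engine rather than hand-drawn rectangles.

```python
import numpy as np

def make_sample(rng, size=64):
    """Draw one bright rectangle on a noisy background; return (image, box)."""
    # Dark random noise as a stand-in background (pixel values below 60).
    image = rng.integers(0, 60, size=(size, size), dtype=np.uint8)
    w, h = rng.integers(8, 24, size=2)
    x0 = rng.integers(0, size - w)
    y0 = rng.integers(0, size - h)
    image[y0:y0 + h, x0:x0 + w] = 255
    # The label is known exactly because we placed the object ourselves.
    box = (int(x0), int(y0), int(x0 + w), int(y0 + h))
    return image, box

rng = np.random.default_rng(seed=0)
dataset = [make_sample(rng) for _ in range(100)]
```

Each entry pairs an image with an exact `(x_min, y_min, x_max, y_max)` box, with no manual annotation step involved.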

Scalability

Collecting and labeling large amounts of real-world data for computer vision applications can be time-consuming and expensive. Synthetic data can be generated quickly and in large quantities, making it a scalable solution for training AI models.

Privacy

Real-world data may contain sensitive or private information that needs to be protected. Synthetic data can be generated without any real-world identifiers, ensuring the privacy of individuals while still providing realistic training data for AI models. For example, medical data is often highly sensitive and subject to strict privacy laws. By generating synthetic medical data, researchers can protect patient privacy while still training AI models to diagnose and treat medical conditions.

Diversity

Synthetic data can be generated to represent a diverse range of scenarios, including rare or dangerous situations that may be difficult or impossible to capture with real-world data. This diversity can help improve the robustness of the AI model and enable it to generalize to new scenarios.

Flexibility

Synthetic data can be generated for scenarios that are difficult or dangerous to replicate in the real world. For example, in the field of autonomous driving, synthetic data can be used to train AI models to recognize and react to rare and dangerous driving situations, such as accidents or extreme weather conditions.

Challenges of Synthetic Data

While synthetic data has several advantages for training computer vision AI models, it also has some limitations, including:

- Representativeness: It is essential to ensure that synthetic data accurately reflects the real-world scenarios that the AI model will encounter. If the synthetic data is not representative of the real-world data, then the AI model may not perform well in practice.

- Generalization: AI models trained on synthetic data may not generalize well to new scenarios. This is because the synthetic data may not capture all the nuances and complexities of the real world. To mitigate this risk, researchers need to carefully design and validate the synthetic data, and test the AI model on a variety of real-world scenarios.

- Validation: Synthetic data needs to be carefully designed and validated to ensure its quality and effectiveness.

Despite these challenges, synthetic data is becoming an increasingly important research tool in AI and machine learning. Synthetic data can help researchers train more accurate and robust AI models by quickly and cheaply generating large amounts of labeled data. We can expect to see more widespread use of synthetic data in the development of AI applications as AI technology advances.

Tools and Methods for Generating Synthetic Data

Synthetic data is a powerful tool for training and testing AI models, with the advantages and challenges outlined above. Several tools and methods are available for generating it, including render engines, simulation software, and generative models. Here are a few examples:

Render engines

Render engine software can be used to create synthetic images and videos for training AI models. Some popular tools include Blender, Unity, and Unreal Engine.

Simulation software

Simulation software can be used to generate synthetic data for scenarios that are difficult or dangerous to replicate in the real world. Some examples of simulation software include Gazebo, CARLA, and MuJoCo.

Generative models

Generative models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), can be used to generate synthetic data that mimics real-world data. These models have been used for applications such as image generation and natural language processing.

Photorealism - Bringing Virtual Worlds to Life

Photorealism refers to the degree to which synthetic data mimics the appearance of real-world objects or scenes. In the context of computer vision, photorealism is essential to ensure that the synthetic data accurately represents real-world scenarios and that AI models trained on the data can generalize to new, unseen scenarios.

The more photorealistic the synthetic data, the more closely it resembles real-world data, which can improve the accuracy and robustness of AI models. Photorealism can be achieved through several techniques, such as using realistic textures and lighting, modeling objects with accurate physical properties, and incorporating environmental factors like shadows and reflections.

Photorealism can improve synthetic data in several ways.

Overall, photorealism is an essential aspect of generating high-quality synthetic data for computer vision applications. It can improve the accuracy and robustness of AI models, reduce biases, and provide a cost-effective alternative to collecting and labeling real-world data.

Photorealism Tools

Below are some examples of tools and platforms that can be used for photorealistic rendering. It goes without saying that each tool has its own strengths and weaknesses, and the choice of tool will depend on the specific needs of the project. Here are our favourite ones:

Unity

Unity is a game engine that can be used to create photorealistic 3D environments and objects. It includes a range of tools and features for creating realistic lighting, textures, and physics simulations.

Unreal Engine

Unreal Engine is another game engine that is commonly used for creating photorealistic environments and objects. It includes features like real-time rendering, advanced lighting, and physics simulation.

Blender

Blender is a 3D modeling and animation software that is widely used for creating photorealistic objects and scenes. It includes a range of features for creating realistic textures, lighting, and physics simulations.

Maya

Maya is another 3D modeling and animation software that is commonly used for creating photorealistic objects and scenes. It includes features like advanced lighting, texture creation, and physics simulation.

SketchUp

SketchUp is a 3D modeling software that is popular for creating photorealistic architectural and interior design models. It includes features for creating realistic lighting, materials, and textures.

Adobe Substance

Adobe Substance is a suite of tools for creating photorealistic textures and materials. It includes a range of features for creating realistic materials like wood, metal, and fabric.

The Role of Generative Models in Synthetic Data for Computer Vision

Another promising approach for addressing the challenges associated with training data for computer vision is the use of generative models such as GANs (Generative Adversarial Networks) and diffusion models. These models use deep learning algorithms to synthesise new high-quality data that is similar to real-world data, allowing researchers to create synthetic datasets that can be used to train AI models in computer vision applications.

In the context of training data for computer vision and synthetic data, generative models like GANs and diffusion models can be used to create synthetic data that is photorealistic and visually indistinguishable from real-world data. This can help address some of the challenges associated with collecting and labeling real-world data, such as the high cost and difficulty of obtaining large amounts of high-quality data.

By using generative models to synthesize data, researchers can quickly generate large datasets that are representative of real-world scenarios, allowing them to train AI models that are robust and accurate. Additionally, these models can be fine-tuned to generate data that is specific to a particular domain or task, allowing researchers to create custom datasets tailored to their needs. Used this way, generative models can improve the overall accuracy and robustness of AI models, leading to better performance in real-world scenarios.

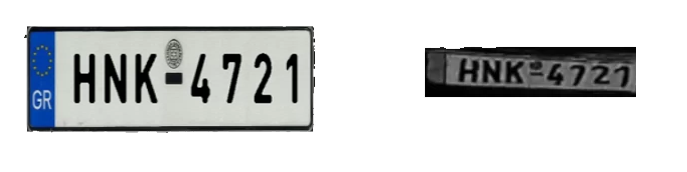

Synthetic Data & Photorealism for car licence plates training dataset

Our team here at Irida Labs has used a combination of computer graphics, machine learning, and photorealism to create synthetic license plate images that were visually indistinguishable from real-world license plate images. This is an example that demonstrates the potential of synthetic data and photorealism for generating high-quality training data for computer vision applications for Smart Cities and Spaces.

To create the synthetic data, our Data Scientist/ML team first modeled the license plate geometry and text using computer graphics. They then used machine learning algorithms to generate realistic textures and lighting conditions for the license plate. Finally, they applied a photorealistic rendering process to the synthetic license plate image to make it look like a real-world image.

A dataset of real-world license plate images was used to train the machine learning algorithms and validate the quality of the synthetic data. The team found that their synthetic data was able to improve the accuracy of license plate recognition algorithms when used in combination with real-world data.
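Combining synthetic and real-world data usually comes down to choosing how much of the training set should be synthetic. The sketch below is a generic illustration of that idea, not the procedure our team used: the function name, the file names, and the 50% ratio are all hypothetical, and the right ratio has to be found experimentally.

```python
import random

def mix_datasets(real, synthetic, synthetic_fraction, seed=0):
    """Combine all real samples with enough synthetic ones to hit the target fraction."""
    if not 0 <= synthetic_fraction < 1:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    # Solve s / (len(real) + s) = synthetic_fraction for s.
    wanted = round(len(real) * synthetic_fraction / (1 - synthetic_fraction))
    rng = random.Random(seed)
    chosen = rng.sample(synthetic, min(wanted, len(synthetic)))
    # Keep the source of each sample so results can be broken down later.
    mixed = [(x, "real") for x in real] + [(x, "synthetic") for x in chosen]
    rng.shuffle(mixed)
    return mixed

# Hypothetical file lists standing in for real and synthetic plate images.
real = [f"real_{i}.png" for i in range(300)]
synthetic = [f"synth_{i}.png" for i in range(1000)]
train = mix_datasets(real, synthetic, synthetic_fraction=0.5)
```

Keeping the validation set purely real-world is a sensible default, since that is the data the model must ultimately handle.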

One advantage of using synthetic data for license plate recognition is that it allows the generation of large amounts of training data quickly and inexpensively, without the need for manually labeling real-world images. Additionally, the photorealistic rendering process used ensured that the synthetic data was visually indistinguishable from real-world images, making it useful for training AI models that need to operate in real-world scenarios.

Data Management with PerCV.ai Platform

PerCV.ai, our end-to-end software & services Vision AI platform, offers an entire suite of tools for centralised data management called the Data Engine.

Within the Data Engine, data can be stored, organized, and shared with your team. A comprehensive annotation tool is at your disposal if manual image annotation is required; already-annotated datasets can also be uploaded.

Generation of Synthetic Data is another powerful option available to PerCV.ai users.

Also available is Irida Labs' USPTO-patented methodology for annotating unlabelled images using convolutional neural networks.


When training goes wrong - Computer Vision failures

There have been several instances where computer vision systems have failed due to inadequate or biased training data. Here are some examples that demonstrate the importance of training data in computer vision applications and the potential consequences of inadequate or biased data:

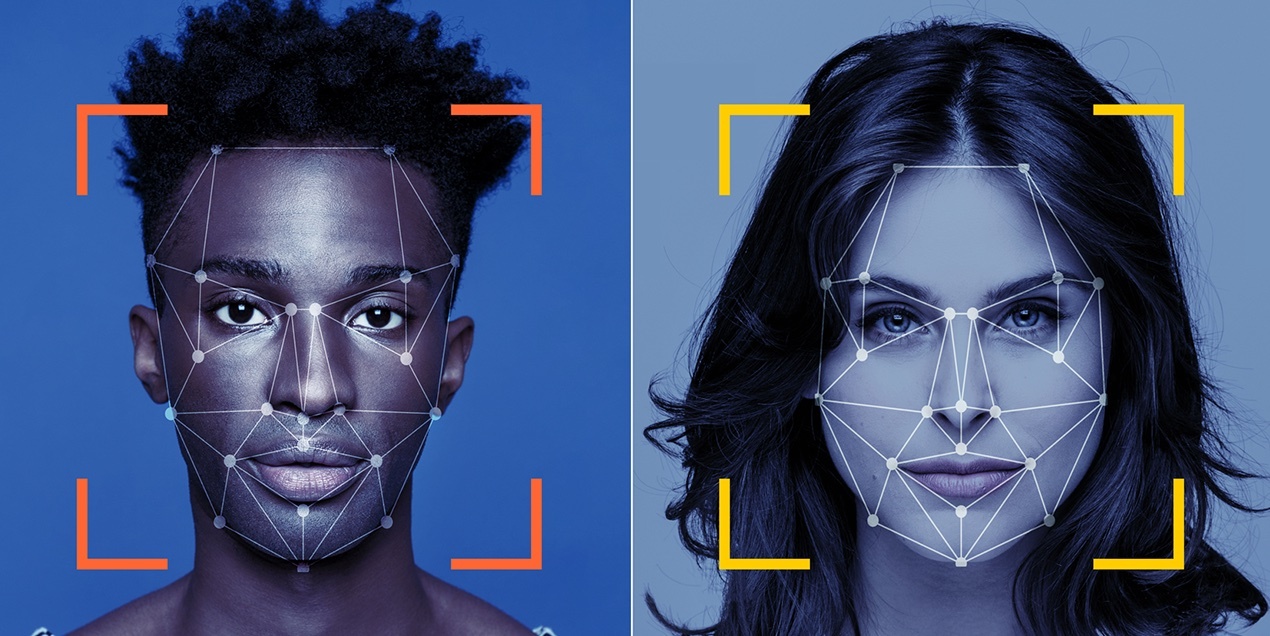

Facial recognition bias

In 2018, researchers found that several commercially available facial recognition systems exhibited significant biases against certain demographic groups, including people with darker skin and women. This was attributed to the lack of diversity in the training data used to train the AI models.

Autonomous vehicle accidents

In 2018, an autonomous test vehicle operated by Uber struck and killed a pedestrian in Arizona. The investigation found that the system failed to correctly classify the pedestrian, who was crossing outside of a crosswalk, and that it was not designed to account for pedestrians in that situation.

Amazon's gender bias

In 2018, it was discovered that Amazon’s AI recruiting system exhibited bias against women. The system was trained on resumes submitted to Amazon over a ten-year period, which were predominantly from men. As a result, the system learned to penalize resumes that included words associated with women.

Google Photos label errors

In 2015, Google Photos was criticized for labeling images of black people as “gorillas.” The error was attributed to the lack of diversity in the training data used to train the image recognition system.

2D Embeddings maps

2D embedding maps are a visualization technique used to assess the quality of data in a high-dimensional space. They project the high-dimensional data onto a 2D plane in a way that preserves the relationships between the data points. In other words, similar data points are placed closer together on the 2D map, while dissimilar data points are placed farther apart.

In the context of photorealism, synthetic data, and data quality for computer vision, 2D embedding maps can be used to evaluate the photorealism and overall quality of the synthetic data. The maps can provide a visualization of how similar or dissimilar the synthetic data is to the real-world data, which can help researchers identify areas where the synthetic data may need improvement.

For example, if a 2D embedding map shows that the synthetic data is clustered together in a different way than the real-world data, it may indicate that the synthetic data does not accurately represent the variability in the real-world data. In contrast, if the synthetic data is closely clustered with the real-world data, it may indicate that the synthetic data is photorealistic and accurately represents the real-world scenarios.

By using 2D embedding maps, researchers can quickly evaluate the quality of the synthetic data and make adjustments to improve the photorealism and overall quality. This can help ensure that the AI models trained on the synthetic data are accurate and robust, leading to better performance in real-world scenarios.
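As a rough sketch of the idea, the snippet below projects feature vectors from real and synthetic images onto two dimensions and measures how far apart the two groups land. It uses PCA via NumPy as a simple stand-in; embedding maps in practice are often built with nonlinear methods such as t-SNE or UMAP, which preserve local neighborhoods better. The random feature vectors here are placeholders for features that would normally come from a vision model.

```python
import numpy as np

def project_2d(features):
    """Project row vectors onto their first two principal components (PCA)."""
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

rng = np.random.default_rng(0)
# Placeholder features: synthetic data is deliberately shifted from real data.
real = rng.normal(0.0, 1.0, size=(200, 32))
synthetic = rng.normal(0.5, 1.0, size=(200, 32))

points = project_2d(np.vstack([real, synthetic]))
real_2d, synth_2d = points[:200], points[200:]
# Distance between cluster centers: a crude signal of a real/synthetic gap.
gap = float(np.linalg.norm(real_2d.mean(axis=0) - synth_2d.mean(axis=0)))
```

Plotting `real_2d` and `synth_2d` in different colors gives the visual map; a large `gap` relative to the spread of each cluster hints that the synthetic data does not yet cover the real distribution.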